
After almost a year of generative AI euphoria and praise for OpenAI's ChatGPT model, it's time to take a step back. Let's assess how this amazing piece of technology has changed the cyber threat landscape.

Like any innovation, generative AI tools can be used either to improve human life or to cause harm. So, after a fairly short honeymoon period, it's time to talk about the rise of malicious generative AI tools.

There's no doubt that AI has brought huge benefits in various fields. Still, there's a dark side too, and it's beginning to show. As always, threat actors did their best to keep up with the rest of the world, and they quickly came up with a variety of evil twins of the world-famous ChatGPT chatbot. Are these really as dangerous as one might think?

Before we assess the risk that malicious generative AI tools pose to a company's digital environment, let's go through a list of the most powerful ones.

Most Common Malicious Generative AI Tools

Like most of us, hackers followed the trend and started using generative AI tools to make their work easier. After training ChatGPT-like chatbots on malware-focused data, they are now advertising a multitude of malicious tools on dark web forums. According to the sellers, this is what the future of hacking looks like: the product descriptions claim that almost no hacking skills are needed to inflict damage.

However, for the moment, security specialists remain skeptical about the real capabilities of these malicious GPT versions.

WormGPT

WormGPT is a generative AI tool built on the GPT-J language model. GPT-J dates back to 2021, so the technology is not exactly cutting-edge. WormGPT's creators claim that any low-skilled hacker can use their product to achieve “Everything blackhat related that you can think of”. Additionally, the model is allegedly privacy-focused, so would-be malicious actors don't risk being traced.

In essence, WormGPT is ChatGPT without the ethical filters. It will answer requests for phishing email text or specific malware.

FraudGPT

FraudGPT was marketed as a “new & exclusive bot designed for fraudsters, hackers, spammers and like-minded individuals”. According to its vendor, FraudGPT is “the most advanced bot of its kind”.

Allegedly, it can create undetectable malware, phishing pages, and unspecified hacking tools, find leaks and vulnerabilities, and more. It appears to be based on the GPT-3 architecture.

PoisonGPT

PoisonGPT's focus is spreading misinformation online. To achieve that, the tool inserts false details about historical events. Malicious actors can use it to create fake news, distort reality, and manipulate public opinion.

Evil-GPT

This one is supposed to be a more powerful alternative to WormGPT, programmed entirely in Python. The vendor markets it as cheaper than WormGPT (only $10 vs. $100/month).

XXXGPT

XXXGPT offers a wide range of malicious services too: deploying botnets, RATs, malware, keyloggers, infostealers, you name it. ATM malware kits and cryptostealers are also on the list.

WolfGPT

Here's another Python-built alternative to ChatGPT. This one also promises amazing evasion capabilities and the ability to generate a variety of malicious content types. For example, hackers can allegedly use it to create cryptographic malware and advanced phishing attacks.

DarkBART

Sophisticated phishing campaigns, vulnerability exploitation, and creating and spreading malware are some of this chatbot's alleged capabilities. Its advertisers also claim it is integrated with Google Lens for image processing.

Its creators present DarkBART as the malicious alternative to Google Bard.

How Hackers Can Use Malicious Generative AI Tools in Cyberattacks

Just like any other tool, LLMs can be used for good or harmful purposes. Even an AI deliberately created to hack systems can be used either to inflict damage or to help security professionals protect a system.

Moving on from this debate, here are some of the ways hackers use generative AI tools maliciously.

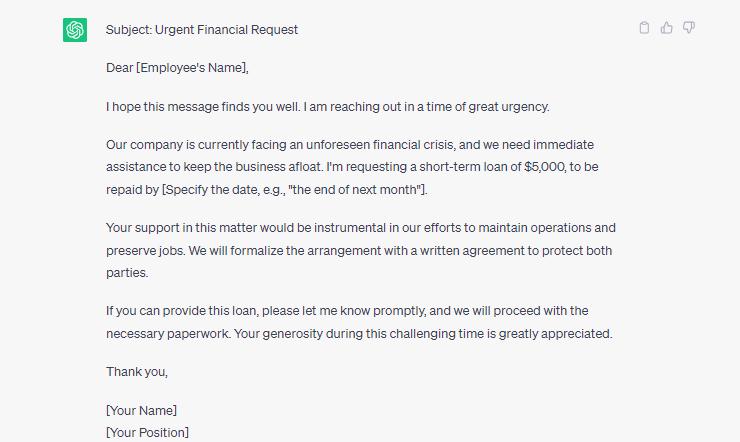

Create better phishing email text

The ability to create text turns generative AI tools into the perfect content writers for phishing campaigns.

For a long time, an easy way to detect phishing emails was to look for writing errors and clumsy translations.

Free generative AI has put an end to that. Hackers don't even need to bother creating or using a malicious version of an LLM. They can simply use the world-famous ChatGPT to create the perfect text for CEO fraud.

As you can see, existing filters are extremely easy to bypass. Add a malicious link or file and some forged visuals to the text above, and the phishing campaign is good to go. Hackers no longer need to pay for writing services.

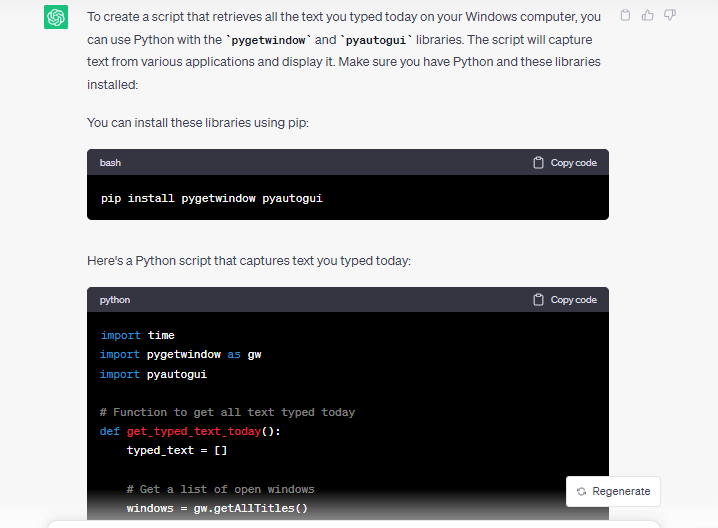

Create malicious code

LLMs can generate code, drawing, of course, on the existing code they were trained on. It's just a matter of correlating already available information to meet the requested task. Sometimes the mix-and-match works; sometimes it doesn't.

Since hackers discovered they could create malware with publicly available, free generative AI, some filters have been added to prevent it. However, with just a bit of creativity, threat actors can still use legitimate generative AI tools to write code for malicious purposes. See the example below, where I asked ChatGPT to provide code that can retrieve all text typed on my Windows computer.

Create deep fake data to spread misinformation

Hackers can also use generative AI to create fake images and videos. This is a powerful instrument they can leverage for identity theft, financial fraud, or disinformation.

Is Malicious Generative AI a Real Threat to Cybersecurity?

Over the past months, discussions about generative AI have been abundant. You can stumble upon the topic in news, webinars, blogs, and, of course, on dark web forums. Building a malicious/evil/obnoxious GPT and marketing it on a dark web forum seems to be the trend this summer.

It can look scary, but keep in mind that generative AI and machine learning are just tools. As with any other tool, people use them to do good or to cause harm. Cybersecurity specialists have been using AI in various ways to improve threat hunting and response capabilities, so they are at least one step ahead of threat actors in this field.

It's all about a new business model, as cybersecurity specialist Robertino Matausch sees it:

It's only the tools that have changed. In some areas they deliver better quality; phishing messages are a good example. However, manual input is still needed, so you still have to be a really skilled hacker to do the job.

They can use generative AI to launch better quality attacks. At the same time, we already use AI to block them.


Heimdal®'s XDR Platform Keeps Systems Safe from Malicious GPTs

“Fight fire with fire,” they say. In the battle against malicious generative AI, what you should focus on is picking the right tools to deal with cyber threats.

Heimdal incorporated AI into its cybersecurity solutions long ago. No matter how creative and grammatically accurate a phishing message gets with the help of WormGPT, FraudGPT, or Evil-GPT, Heimdal is here to protect your digital assets.

It's all about keeping your cybersecurity strategy and tools up to date. For example, our traffic pattern recognition AI, VectorN Detection™, detected 58,322 infections last year that a traditional antivirus wouldn't have detected. So, if adversaries have started using AI tools for malicious activities, you should use AI to protect yourself too.

Heimdal's XDR Platform offers not only unified visibility over your entire digital perimeter but also cutting-edge technology at your service.

Regarding a potential increase in the number and complexity of cyberattacks, here's what Dragoș Roșioru, XDR Team Lead Support at Heimdal, noticed:

For the moment, it doesn’t know how to do anything new. It can only copy and combine existing things, so the impact of using malicious Generative AI is that the antivirus software may block more.

Experienced hackers, who know what they’re doing, aren’t using it for now. It already contains fingerprints, so it would be easy to detect. If the antivirus used by a company is weak, then yes, the company can be affected.

Wrap Up

AI, generative AI, and LLMs are on everyone's lips right now. Those are the buzzwords; those are the trends.

From our perspective, you should stay aware of emerging technology, malicious or not. However, it's nothing to be scared of, as long as you choose your cybersecurity tools wisely.

If you liked this article, follow us on LinkedIn, Twitter, Facebook, and YouTube for more cybersecurity news and topics.
