
The rise of GenAI (generative AI) has given malicious content creators more leeway: 80% of all phishing campaigns discovered in the wild are generated with AI tools such as ChatGPT and similar.

In this article, we are going to explore the latest phishing techniques that capitalize on GenAI.

A new milestone in phishing

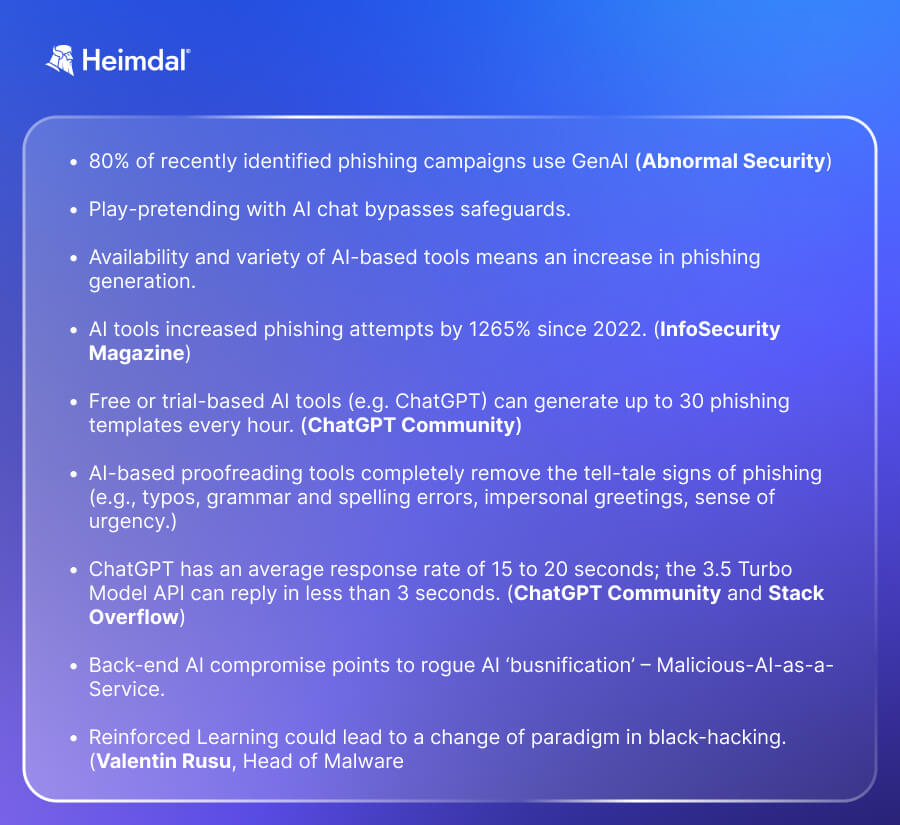

Why is phishing still a cause for concern? Check out these stats.

- 80% of recently identified phishing campaigns use GenAI (Abnormal Security)

- Role-playing with AI chatbots can bypass their safeguards.

- The availability and variety of AI-based tools mean more phishing content is being generated.

- AI tools have driven a 1,265% increase in phishing attempts since 2022. (InfoSecurity Magazine)

- Free or trial-based AI tools (e.g. ChatGPT) can generate up to 30 phishing templates every hour. (ChatGPT Community)

- AI-based proofreading tools completely remove the tell-tale signs of phishing (e.g., typos, grammar and spelling errors, impersonal greetings, a sense of urgency).

- ChatGPT has an average response time of 15 to 20 seconds; the GPT-3.5 Turbo model API can reply in less than 3 seconds. (ChatGPT Community and Stack Overflow)

- Back-end AI compromise points to the ‘businessification’ of rogue AI: Malicious-AI-as-a-Service.

- Reinforcement learning could lead to a paradigm shift in black-hat hacking. (Valentin Rusu, Head of Malware Research and Analysis Team)

Here’s an infographic of the above stats, in case you want to save and share.

Recent phishing samples retrieved from community-powered databases such as PhishTank show little to no trace of the signs usually associated with phishing content:

- domain spinning;

- grammar & spelling errors;

- suspicious or unexpected attachments;

- a sense of urgency.

On this point, Marlena-Adelina Deaconu, Heimdal®’s MXDR (SOC) Team Lead, said:

(…) the rise of GenAI has indeed contributed to an increase in the quality and variety of phishing emails, making them more difficult to detect.

I’m especially worried about how it can now analyze and exploit personal vulnerabilities and emotions, making the emails seem more convincing.

We experienced a Business Email Compromise (BEC) attempt where the scammer tried to impersonate our CEO. More information about this can be found here.

Crafting polished phishing content can be sped up further with dedicated, AI-powered paraphrasing tools.

For instance, QuillBot, the go-to solution for overcoming writer’s block or respinning content, can help the user simplify texts, eliminate grammar issues and typos, and substantially improve the material’s overall quality.

This severely hampers any detection and remediation efforts on the defender’s side.

The rapid pace of AI adoption opens up new horizons for developers as well as MSPs.

By the end of 2023, AI had reached a new milestone, with over 10,000 tools rolled out to serve various needs.

Phishing activity has increased by more than 1,000% since the launch of ChatGPT in November 2022, and forecasts show a linear trend for the 2023-2024 interval.
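Percentage increases of this size are easy to misread. An increase of p% multiplies the starting volume by (1 + p/100), so, assuming both figures are measured against the same 2022 baseline, the 1,265% figure cited in the stats (InfoSecurity Magazine) corresponds to about 13.65 times the earlier level, and "more than 1,000%" means more than 11 times:

```latex
\frac{N_{\text{after}}}{N_{\text{before}}} = 1 + \frac{p}{100},
\qquad p = 1265 \;\Rightarrow\; 1 + 12.65 = 13.65,
\qquad p > 1000 \;\Rightarrow\; \frac{N_{\text{after}}}{N_{\text{before}}} > 11
```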

Threat actors no longer need to craft phishing emails manually or outsource the work.

Free AI tools can now be used to generate malicious content.

Although solutions such as ChatGPT have protection mechanisms that bar users from using the software for nefarious purposes, role-playing can be used to bypass these safeguards.

Valentin Rusu, Heimdal®’s Head of Malware Research and Analysis Team, stress-tested this theory and supplied the following example.

Any threat actor can generate at least 30 viable phishing samples per hour via role-playing.

For instance, if a regular user opens the free version of ChatGPT and, posing as a white-hat hacker, asks it to create phishing email templates, the engine will begin generating the samples.

In the context of rogue AI, reinforcement learning can increase the effectiveness of adversarial activity. Valentin Rusu noted:

I am more scared of another subject, one that no one talks about and that has not been exploited yet.

This subject is Reinforcement Learning: the capability of an agent to learn by itself when given only the objective. For example, you can tell the agent the rules of chess and the objective: checkmate.

From that point, the agent will play all the possible scenarios and become the best grandmaster that has ever existed. I will give you another example: OpenAI trained a bot on the equivalent of 1 million years of playing Dota 2. Imagine a human being who had played a game for 1 million years; would anyone be able to beat them?

Now, let’s move those examples into cybersecurity. Imagine you are a hacker and you can reproduce the existing security systems. What would happen if you trained an agent to break such a system through trial and error?

This is what I am scared of: that a hacker will succeed and break the internet.
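Rusu's description of reinforcement learning (an agent that learns by trial and error when given only an objective) can be illustrated with a deliberately harmless toy. The sketch below is an illustrative example, not code from Heimdal or OpenAI: it uses tabular Q-learning, one standard reinforcement-learning algorithm, on a hypothetical five-cell corridor where the only reward comes from reaching the last cell. The environment, the reward, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions chosen for clarity.

```python
import random

# Hypothetical toy environment: a corridor of cells 0..4.
# The agent starts in cell 0; reaching cell 4 (the "objective") yields reward 1.
N_STATES = 5
ACTIONS = (-1, +1)  # move left or move right
GOAL = N_STATES - 1


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Apply an action, clamp to the corridor, return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.2, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning: the agent only sees rewards, never the 'right answer'."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit what was learned, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Nudge the estimate toward reward + discounted best future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q: list[list[float]]) -> list[int]:
    """Best-known move (-1 or +1) for every non-goal cell."""
    return [ACTIONS[max(range(2), key=lambda i: q[s][i])] for s in range(GOAL)]


if __name__ == "__main__":
    q_table = train()
    print(greedy_policy(q_table))  # expected: [1, 1, 1, 1] (move right everywhere)
```

The agent is never told which direction is correct; it only receives a reward on reaching the goal, and after enough episodes its table favors moving right in every cell. Game-playing agents use far larger models (neural networks instead of a table), but they rest on the same trial-and-error principle.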

Rogue AI in Phishing

Using AI tools to craft malicious content offers threat actors several advantages, including:

- Higher-quality content that’s harder for traditional cybersecurity software to detect.

- AI-generated phishing emails can stay under the length detectors rely on. Recent studies show that phishing emails under the 250-character mark fall below the detection threshold of LLM-powered AI tools. (InfoSecurity Magazine)

- Higher content-generation velocity. ChatGPT 3.x has a cap of 30 messages per hour, while the latest model has a cap of 40 messages per hour. Even with these limits in place, a threat actor generating one template per message around the clock could produce between 720 and 960 phishing email templates within 24 hours.

- Rolling towards a Malicious-AI-as-a-Service model. Considering current trends, the ‘businessification’ of rogue AI is months away. With open-source AI frameworks (e.g., PyTorch) available, setting up a pay-to-play or subscription-based platform is only a matter of time.
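The 720 to 960 daily figure in the content-generation velocity point is plain arithmetic: hourly cap × 24 hours. The sketch below makes the implicit assumptions explicit (one account, one template per message, a full day of uninterrupted use); the `templates_per_message` parameter is a hypothetical knob added for illustration, not something the article or OpenAI specifies.

```python
# Daily output implied by an hourly message cap, assuming one account,
# one template per message (by default), and 24 hours of uninterrupted use.
HOURS_PER_DAY = 24


def templates_per_day(messages_per_hour: int, templates_per_message: int = 1,
                      hours: int = HOURS_PER_DAY) -> int:
    """Upper bound on templates generated in `hours` under an hourly cap."""
    return messages_per_hour * templates_per_message * hours


older_cap = 30  # ChatGPT 3.x cap cited in the article (messages per hour)
newer_cap = 40  # cap cited for the latest model (messages per hour)

if __name__ == "__main__":
    print(templates_per_day(older_cap), templates_per_day(newer_cap))  # 720 960
```

Because the result is a simple product, doubling the templates per message or adding a second account doubles the daily total.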

Extra Resources

For additional info, you can check out these useful stats.

- 83% of companies prioritize AI over any other type of technology. (Notta AI)

- 51% of businesses depend on AI for threat detection and remediation. (EFT Sure)

- One in five people will open AI-generated phishing emails. (SoSafe Awareness)

- 69% of organizations stated that they could not avert cyber-attacks without AI. (Capgemini)

- ChatGPT has an average response time of 15-20 seconds.

- The GPT-3.5 Turbo model API can generate a reply in less than 3 seconds.

If you liked this article, follow us on LinkedIn, Twitter, Facebook, and YouTube for more cybersecurity news and topics.
